#The Importance of Open-Source Large Language Models (LLMs)

Large Language Models (LLMs) are foundational models trained on vast text datasets, encompassing web pages, books, and articles. They are designed to generate text, translate languages, automate tasks, and create various types of content. Proprietary LLMs are typically owned by companies and are only accessible through private APIs. The most prominent proprietary LLMs include OpenAI's ChatGPT, Anthropic's Claude, and Google's PaLM 2, which powers the Bard chatbot.

However, people have begun to recognize that proprietary LLMs impose restrictions on how they can be used. These LLMs are concentrated within a few major tech companies, which raises concerns about transparency, bias, robustness, and data privacy. In response, the LLM community has developed numerous open-source or source-available LLMs. On many benchmarks, these alternatives are competitive with much larger models. Because you can host them yourself, they give individuals and organizations full control over their data, which makes ethical and legal compliance easier to manage. With a modest budget and a small amount of data, you can fine-tune small LLMs to achieve impressive results.

#Preparation

Instill Cloud is the recommended platform for accessing these open-source LLMs, offering ease of use and reliability. To get started, follow the quick start guide to set up your Instill Cloud account.

#Access Pre-trained LLMs in Instill Cloud

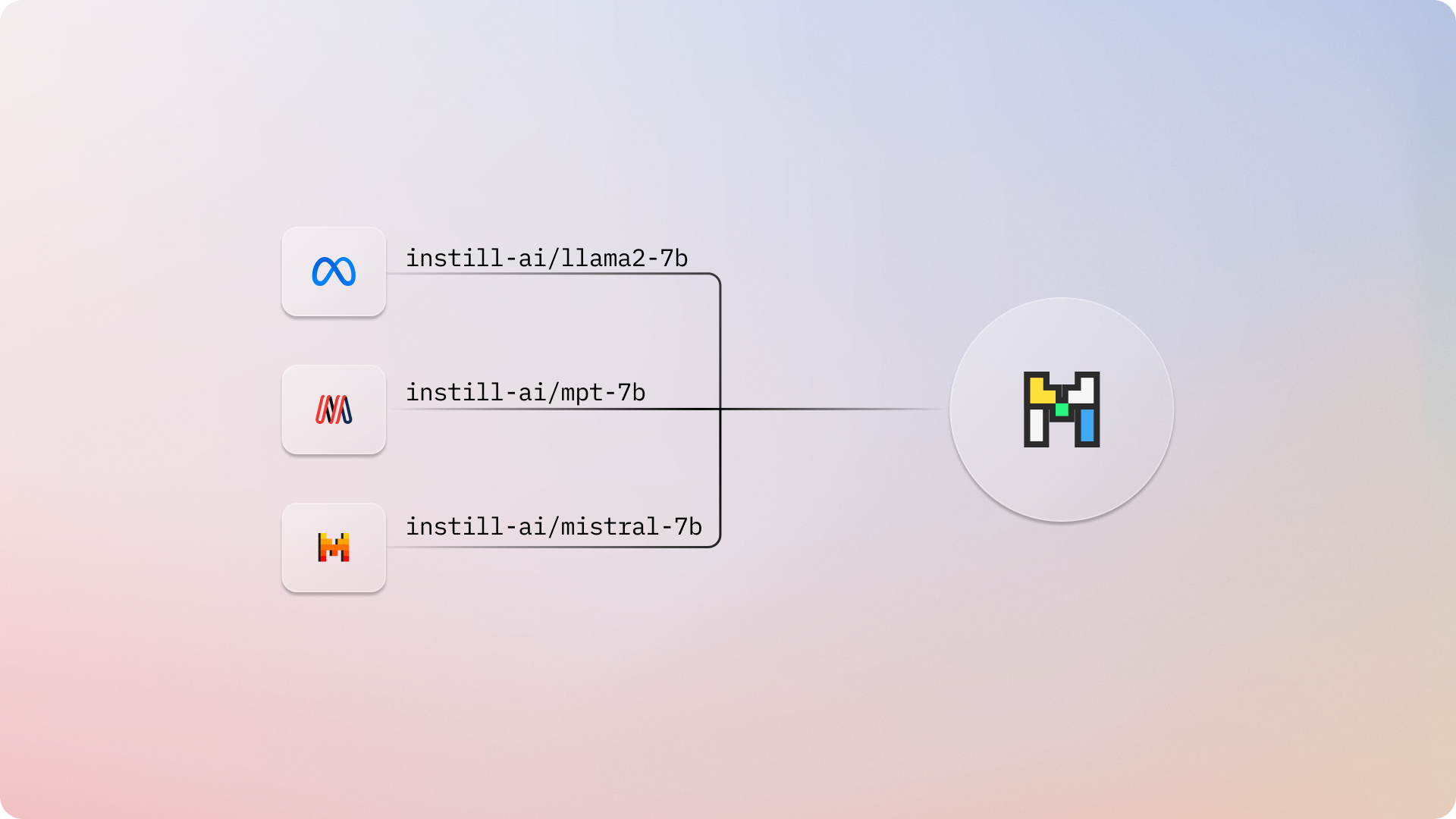

To access pre-trained AI models hosted in Instill Cloud, log in to the Console and navigate to the Model Hub page.

Here, you'll find a collection of pre-trained AI models under the instill-ai account, ready for use in your applications.

During the Open Alpha phase, access to these models is entirely free.

Each model in the Model Hub is designed to handle specific AI tasks. For detailed information about a specific model, click on its ID and explore the Description and Settings sections.

Below is a list of available LLMs, along with their details and links in Instill Cloud:

| LLMs | Params (B) | Instill Model Links | Notes | License | Commercial Usage | Release Date |
|---|---|---|---|---|---|---|
| MPT-7B Base | 6.7 | instill-ai/mpt-7b | Trained from scratch on 1T tokens of text and code. It matches the quality of LLaMA-7B. MPT-7B was trained on the MosaicML platform in 9.5 days with zero human intervention. | Apache-2.0 | ✅ | May 5, 2023 |
| LLaMA-2 7B | 7 | instill-ai/llama2-7b | Meta AI released LLaMA-2, pre-trained on 2 trillion tokens with a 4096 context length. Some benchmark graphs show it roughly ties with GPT-3.5 and performs noticeably better than Falcon, MPT and Vicuna. | LLaMA-2 | ✅ | July 18, 2023 |
| Mistral 7B | 7.3 | instill-ai/mistral-7b | Mistral AI team released Mistral 7B, the most powerful language model for its size to date. It outperforms LLaMA-2 13B on all benchmarks. | Apache-2.0 | ✅ | Sep 27, 2023 |

The Open LLM Leaderboard ranks Mistral 7B as a strong candidate for practical commercial usage among models below 13B parameters.

#Build an AI pipeline to access the LLMs

In this section, we will build an AI pipeline to compare the outputs of the LLMs served in Instill Cloud.

Step 1: Add the Instill Model connector resource

Instill VDP provides an AI connector for Instill Model, where users can import and serve custom models.

To set up an Instill Model connector resource in the Console, follow these steps:

- Navigate to the Resources page on the left sidebar.

- Click on + Add Resource, and select Instill Model.

- Provide a unique ID for the resource. Here we use ins-model. Optionally, you can add a description.

- Fill in the API Token. To access models in Instill Cloud, enter your Instill Cloud API Token. You can create and find your tokens by visiting the Settings > API Tokens page.

- Fill in the Server URL with https://api.instill.tech.

- Click Save.

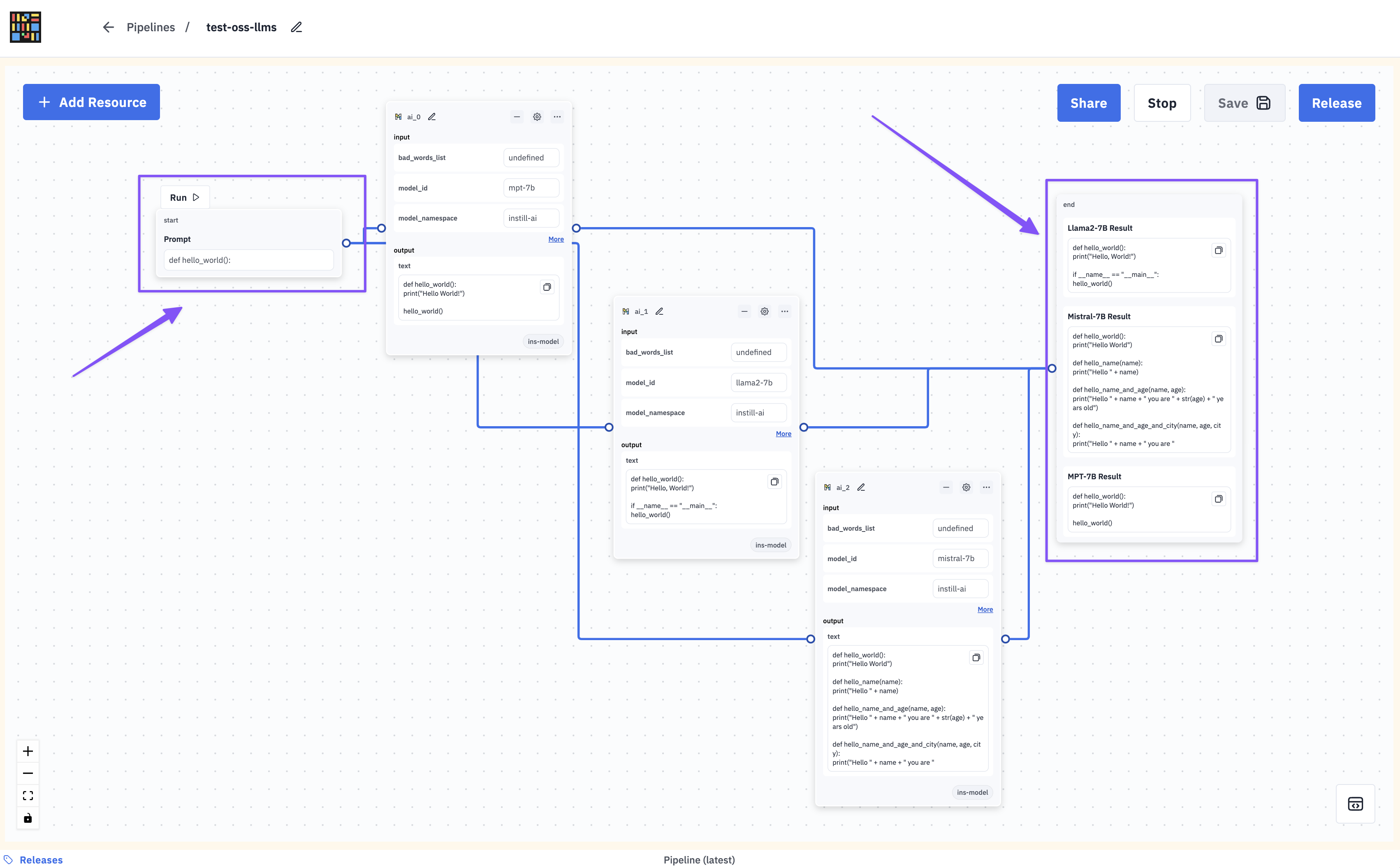

Now, head to the Pipelines page on the left sidebar. To create your pipeline through the Console, click on + Create Pipeline. This will redirect you to the no-code pipeline builder. The pipeline will be assigned a randomly generated unique ID. You can change it on the right, for example, test-oss-llms. On the canvas, you can drag and drop connectors and operators to construct your pipeline.

Step 2: Build a VDP pipeline using the no-code pipeline builder

The Start component serves as the starting point of a VDP pipeline, where you can define the input data for your applications.

To set up a Start component:

- Locate the Start component on the canvas.

- Click Add Field + and select Text. Fill in the Title with Prompt and Key with prompt.

- Click Save.

This configuration requires one input: a text prompt for all the open-source LLMs.

To configure an Instill Model connector:

- Click + Create Connector and choose ins-model from the existing resources. This will add an Instill Model connector ai_0 to the canvas.

- Click the ⚙️ icon on ai_0 to open the configuration panel on the right-hand side.

- Select TASK_TEXT_GENERATION from the dropdown of the Task field.

- Set Model ID to choose the model to use, e.g., mpt-7b.

- Set Model Namespace to instill-ai, the namespace (username) that owns the model.

- In the Prompt field, specify the text prompt {start.prompt}.

- Set Seed to 0 to seed the random sampling.

- Click Save.

The Instill Model connector will automatically link to the Start component since we have used {start.prompt} in the Instill Model connector configuration. The {} placeholders are used to reference values from previous components. Unlike the {{}} placeholders, which output a text string, {} retains the type of the referenced value.
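To make the difference between the two placeholder styles concrete, here is a toy resolver in Python. It is only an illustration of the behavior described above, not VDP's actual implementation; the `resolve` and `lookup` helpers and the `seed` value on the Start component are hypothetical.

```python
import re
from typing import Any

# Hypothetical upstream values, keyed the way a pipeline would reference them.
# The "seed" entry is invented purely to show a non-string value.
context = {"start": {"prompt": "def hello_world():", "seed": 0}}


def lookup(path: str, ctx: dict) -> Any:
    """Walk a dotted path such as 'start.prompt' through nested dicts."""
    value: Any = ctx
    for key in path.split("."):
        value = value[key]
    return value


def resolve(template: str, ctx: dict) -> Any:
    """Toy resolver for the two placeholder styles (illustrative only)."""
    # A field that is exactly one {path} reference keeps the referenced type.
    single = re.fullmatch(r"\{([\w.]+)\}", template)
    if single:
        return lookup(single.group(1), ctx)
    # {{path}} references are rendered into the surrounding text as strings.
    return re.sub(
        r"\{\{([\w.]+)\}\}",
        lambda match: str(lookup(match.group(1), ctx)),
        template,
    )


print(repr(resolve("{start.seed}", context)))            # stays an int
print(repr(resolve("Seed is {{start.seed}}", context)))  # becomes text
```

In this sketch, a field set to `{start.seed}` receives the integer itself, while `{{start.seed}}` is interpolated into a string.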

With the above steps, we have set up the MPT-7B model. To set up the other two models, LLaMA-2 7B and Mistral 7B:

- Click the ··· icon on ai_0 and select Duplicate to create another Instill Model connector, named ai_1, on the canvas.

- Click the ⚙️ icon on ai_1 to open the configuration panel on the right.

- Update Model ID from mpt-7b to llama2-7b.

- Click Save.

You can repeat the same process to duplicate another component, ai_2, and update the Model ID to mistral-7b to set up Mistral 7B.

The End Component is used at the end of a VDP pipeline to receive the output of the pipeline being triggered.

To set up an End component:

- Find the End component on the canvas.

- Click Add Field + and fill in the Title with MPT-7B Result, Key with mpt_7b_result and Value with {ai_0.output.text}.

- Click Add Field + and fill in the Title with Llama2-7B Result, Key with llama2_7b_result and Value with {ai_1.output.text}.

- Click Add Field + and fill in the Title with Mistral-7B Result, Key with mistral_7b_result and Value with {ai_2.output.text}.

- Click Save.

You will see that the End component is automatically linked to multiple components: ai_0, ai_1 and ai_2.

Click Save on the top right corner.
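As a recap, the wiring built in the steps above can be written down as plain data. The Python sketch below is only a summary aid: the dictionary field names (`task`, `model_namespace`, and so on) are illustrative and are not claimed to match VDP's pipeline recipe format. The model IDs, connector IDs, and End-component keys are the ones used in this tutorial.

```python
# Model IDs in the order the connectors were created on the canvas.
model_ids = ["mpt-7b", "llama2-7b", "mistral-7b"]

# Settings shared by every connector (field names are illustrative).
base_config = {
    "task": "TASK_TEXT_GENERATION",
    "model_namespace": "instill-ai",
    "prompt": "{start.prompt}",
    "seed": 0,
}

# Duplicating a connector amounts to copying the config and changing the model ID.
connectors = {
    f"ai_{index}": {**base_config, "model_id": model_id}
    for index, model_id in enumerate(model_ids)
}

# Each End-component field references the text output of one connector.
end_fields = {
    model_id.replace("-", "_") + "_result": f"{{ai_{index}.output.text}}"
    for index, model_id in enumerate(model_ids)
}

for key, reference in end_fields.items():
    print(f"{key} <- {reference}")
```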

#Run the pipeline

🎉 Congratulations! Your pipeline is all set up and ready to go. Click the Test button in the top right corner to put your pipeline to the test using real data. For instance, let's input the Prompt as

Prompt: def hello_world():

Click Run.

The End component will show the text generation result of each LLM:

Please give it a try, but note that these LLMs are base models and are not fine-tuned for chat-like dialogue generation.

#Build Your App using the Pipeline

To trigger the pipeline, you need a valid API token. If you don't have one yet, follow these steps:

- Click on Settings on the left sidebar

- Navigate to the API Tokens page

- Click Create Token and give it an ID

The API tokens do not expire, so keep them safe. If a token is compromised, select it and click Delete. Be cautious, as this operation cannot be undone, and apps using the token will stop working.
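Since tokens do not expire, one common way to keep them out of source code is to read them from an environment variable at runtime. The sketch below assumes a variable named `INSTILL_API_TOKEN`; that name is a hypothetical choice for this example, not something Instill Cloud requires.

```python
import os


def load_api_token(env_var: str = "INSTILL_API_TOKEN") -> str:
    """Read the API token from the environment instead of hardcoding it.

    The default variable name is a hypothetical convention for this example.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Instill Cloud API token."
        )
    return token
```

With this approach, deleting a compromised token and issuing a new one only requires updating the environment, not the application code.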

VDP automatically generates a dedicated trigger endpoint for each pipeline to process data. To trigger the pipeline, follow these steps:

- Click the bottom right icon to display the Pipeline toolkit

- Copy the cURL request in the Trigger Snippet section and pass your API token as a Bearer token <api_token> in the Authorization header

- Adjust the example request to send your own data

Here are an example cURL command for triggering the pipeline and an equivalent example using the instill-sdk Python package:

Where <user-id> represents your username.
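For readers who prefer plain Python, a trigger request might be assembled with the standard library as sketched below. This does not use instill-sdk. The endpoint path and the `inputs` wrapper in the request body are assumptions made for this sketch; copy the exact URL and body from the Trigger Snippet in your own Console. The server URL, the Bearer token header, and the `prompt` key come from the steps above.

```python
import json
import urllib.request

API_BASE = "https://api.instill.tech"  # Server URL used earlier in this tutorial


def build_trigger_request(
    user_id: str, pipeline_id: str, prompt: str, api_token: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request that triggers the pipeline."""
    # ASSUMPTION: this path is a guess at the trigger endpoint's shape.
    # Use the URL shown in the Trigger Snippet section of the Console.
    url = f"{API_BASE}/vdp/v1alpha/users/{user_id}/pipelines/{pipeline_id}/trigger"
    # ASSUMPTION: inputs are wrapped in a list, mirroring the "outputs" list
    # in the response. "prompt" is the Key set on the Start component.
    body = json.dumps({"inputs": [{"prompt": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


def trigger(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (performs a network call)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Building the request is safe to run offline; only trigger() touches the network.
request = build_trigger_request(
    "<user-id>", "test-oss-llms", "def hello_world():", "<api_token>"
)
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect the URL, headers, and body before spending any model calls.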

You should receive the following response:

    {
      "outputs": [
        {
          "llama2_7b_result": "Once upon a time, there was a little girl who loved to read. She loved to read so much that she would read anything she could get her hands on. She would read the cereal box, the back of the cereal box, the back of the cereal box again, and then she would read the cereal box again. She would read the cereal box so much that she would read the cereal box until she was sick of reading the cereal box.\n",
          "mistral_7b_result": "Once upon a time, there was a little girl who loved to read. She loved to read so much that she would read books over and over again. She would read books about princesses, books about fairies, books about mermaids, and books about unicorns. She would read books about dragons, books about wizards, books about witches, and books about goblins. She would read books about knights, books about kings, books about queens, and books about cast",
          "mpt_7b_result": "Once upon a time, there was a little girl who loved to play with her dolls. She would dress them up in pretty clothes and put them to bed. She would play with them and talk to them. She would even take them to the park and play with them.\nOne day, the little girl was playing with her dolls in the park. She was having a great time, until she saw a boy playing with his toy cars. She was jealous of the boy and wanted to play with his toy cars"
        }
      ],
      "metadata": {
        "traces": {}
      }
    }
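In an application, you would typically pull each model's text out of the `outputs` list. The following sketch parses a response with the same structure as the one above; the generated texts are shortened to their first sentence to keep the example small, and the `results_by_model` helper is a name made up for this example.

```python
import json

# Shortened stand-in for the response above: same keys and structure,
# with each generated text cut down to its first sentence.
response_text = """
{
  "outputs": [
    {
      "llama2_7b_result": "Once upon a time, there was a little girl who loved to read.",
      "mistral_7b_result": "Once upon a time, there was a little girl who loved to read.",
      "mpt_7b_result": "Once upon a time, there was a little girl who loved to play with her dolls."
    }
  ],
  "metadata": {"traces": {}}
}
"""

SUFFIX = "_result"


def results_by_model(response: dict) -> dict:
    """Map each End-component key (minus the '_result' suffix) to its text."""
    first_output = response["outputs"][0]
    return {
        key[: -len(SUFFIX)]: text.strip()
        for key, text in first_output.items()
        if key.endswith(SUFFIX)
    }


results = results_by_model(json.loads(response_text))
for model, text in sorted(results.items()):
    print(f"[{model}] {text}")
```

The keys in each output object are the Key values defined on the End component, so renaming a field there changes what this code needs to look for.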

#What's Next?

Our journey with Instill Model is just beginning! We have exciting plans on our roadmap, including the continuous deployment of cutting-edge models. Our next target is to provide features for fine-tuning these open-source models on specific tasks with custom data. Stay tuned for more updates!

If you're interested in a quick demo, please feel free to book a meeting with us. Or, please join our Discord to chat online. We'd love to hear from you! 👐